Online publication date 22 Apr 2025

Development of a Digital Curation Maturity Model and Indicators Including Weights for Measuring Digital Transformation Outcomes

Abstract

Information management institutions consider digital curation as a crucial area, and achieving digital transformation using the latest technologies is highly important. However, evaluation models for the maturity of digital curation and its continuous development have been scarce. Hence, this study developed a digital curation maturity model and indicators for measuring digital transformation outcomes. Weights were assigned to each indicator to allow for practical application during evaluations. This study identified 16 medium categories under 5 main categories, along with 40 subcategories. It also found 117 indicators and calculated weights for each main category and subcategory. The digital transformation maturity assessment model’s most basic function is to assess the current state of the organization, providing a means for control and suggesting actions for the future. The findings can be used to conduct a systematic evaluation of digital curation maturity, contributing to the continuous development of information management.

Keywords:

Digital Curation, Maturity Model, Open Science, Digital Transformation, Confirmatory Factor Analysis

1. Introduction

1.1 Necessity and purpose of the research

From an information management organization perspective, understanding and adopting new technological environments is an essential task, particularly when the technology is linked to the production and distribution of information resources. “Fourth Industrial Revolution” and “data” are said to be the keywords representing recent changes in the technological environment. The COVID-19 pandemic has further heightened the importance of technology, data, and information, acting as a catalyst especially for expanding information sharing and utilization among the general public.

To address these changes, various global information resource management and service organizations are working toward digital transformation. In 2016, the International Institute for Management Development (IMD), in its annual World Digital Competitiveness Ranking report, introduced a specialized competitiveness index for digital transformation (IMD World Competitiveness Center, 2021). Furthermore, the German Engineering Federation developed IMPULS Industry 4.0 Readiness, which allows institutions or businesses to evaluate their preparedness for adapting to the Fourth Industrial Revolution. The Singapore government also introduced the Smart Industry Readiness Index (SIRI), which is based on a broad system of classifying processes, technologies, and organizations. The SIRI rates 16 detailed subcategories on a six-point scale to assess digital maturity levels (Singapore Economic Development Board, 2020).

In South Korea, representative information resource management and service organizations that actively respond to digital transformation are the Korea Education and Research Information Service (KERIS), the National Library of Korea, and the Korea Institute of Science and Technology Information (KISTI). The KERIS places emphasis on the need to establish inclusive future education governance and proposes principles to adhere to in the digital transformation of future education (Korea Education and Research Information Service, 2021). The National Library of Korea formulated a three-year plan for digital services with a list of 15 detailed initiatives to address digital transformation (National Library of Korea, 2021).

Meanwhile, the KISTI not only worked at a theoretical level but also actively initiated business process reengineering (BPR) projects for digital transformation in 2021, seeking organizational workflow changes for digital transformation. Its implementation of BPR adopted the concept of a maturity model (National Science and Technology Data Center Content Curation Center, 2020), providing distinctive features. The maturity model is designed to evaluate an organization’s capacity for continuous improvement; higher maturity levels correspond to lower probabilities of issues and greater adaptability to changes for quality improvement. Suitable methods for fostering medium- to long-term transformations include developing a maturity model, planning for changes in the current work environment, and evaluating performance based on outcome indicators. Using a maturity model allows for not only quantitative measurements but also qualitative assessments, making it even more versatile.

However, even if an institution aspiring for digital transformation analyzes and derives improvement measures for each of its departments’ unit tasks, approaches to measure the outcomes of these improvement measures have been inadequate. Simply put, while plans are formulated, there is an absence of methods to assess outcomes.

Therefore, this study aimed to develop a digital curation maturity model and indicators for measuring digital transformation outcomes using a maturity model that assesses an institution’s continuous development. Additionally, each indicator was assigned weights to allow for practical application during evaluations. The concept and scope of digital transformation can vary depending on the application. This study focused on open science-based digital transformation, specifically the continuous development and dissemination of scientific research outcomes.

1.2 Research scope and methods

To achieve this research goal, one must first conduct various case studies and, based on them, construct scales and indicators. Key concepts in the construction of scales and indicators focus on digital transformation and data. With regard to data, considering actual tasks in the field, it is essential to include data quality, research data, open data, and even artificial intelligence (AI) learning data. Research methods for developing the digital curation maturity model and measurement indicators can be broadly categorized into two: The first involves a preliminary model construction based on different case studies and their results, considering the research objectives. The second entails model validation targeting experts, including relevant institutions and academia. These are summarized in Table 1.

The preliminary model was constructed by eliminating redundancy and integrating scales and indicators suggested by different case studies that align with the research objectives. The model developed through the investigation was evaluated through user surveys. The surveys sought to determine the appropriateness of the model’s measurement indicators; for this purpose, confirmatory factor analysis and reliability verification were performed.

Additionally, to derive weights for the measurement indicators of the digital curation maturity model developed in this study, the analytic hierarchy process (AHP) technique was employed. Proposed by Thomas L. Saaty in 1980, AHP is a method for finding solutions to complex decision-making problems. Saaty (1980) suggested a technique for decomposing various options in complex decision-making problems into components, determining these components’ relative priorities, and deciding on the final priorities. Since then, the AHP has been used for decision-making in different fields. The general AHP procedure follows the steps outlined by Saaty (1980), summarized as follows:

- Step 1: Decompose the decision-making issue into a hierarchical structure.

- Step 2: Create matrices representing the relations between each layer and its sublayers.

- Step 3: Assign relative weights to each relation.

- Step 4: Evaluate the relative scores for each alternative based on how well they satisfy the criteria.

- Step 5: Aggregate the computed scores to comprehensively estimate the value of each alternative.
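The weight derivation in steps 2 and 3 can be illustrated with a minimal Python sketch. It assumes the common eigenvector formulation of AHP, approximated here by power iteration, together with the commonly cited random index values used in the consistency ratio. The function names and the three-criterion pairwise matrix are hypothetical and are not data or tooling from this study.

```python
# Commonly cited random consistency index (RI) values for matrix orders 1-10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def multiply(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def ahp_weights(matrix, iterations=100):
    """Estimate priority weights as the principal eigenvector via power iteration."""
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        product = multiply(matrix, weights)
        total = sum(product)
        weights = [p / total for p in product]  # renormalize so weights sum to 1
    # Estimate the principal eigenvalue from the converged weights.
    product = multiply(matrix, weights)
    lambda_max = sum(p / w for p, w in zip(product, weights)) / n
    return weights, lambda_max


def consistency_ratio(lambda_max, n):
    """CR = CI / RI; values below 0.1 are conventionally treated as acceptable."""
    if n <= 2:
        return 0.0
    consistency_index = (lambda_max - n) / (n - 1)
    return consistency_index / RANDOM_INDEX[n]


# Hypothetical judgments for three criteria (not data from this study):
# A is 3x as important as B and 5x as important as C; B is 2x as important as C.
pairwise = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 2.0],
    [1 / 5, 1 / 2, 1.0],
]
weights, lambda_max = ahp_weights(pairwise)
cr = consistency_ratio(lambda_max, len(pairwise))
print([round(w, 3) for w in weights], round(cr, 3))
```

In a hierarchy such as the one used here, the same routine would be applied once per level, and a subcategory's overall weight would be the product of its local weight and the weight of its parent category.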

Because this study focused on deriving weights for existing models from research, it skipped step 1 and proceeded with the steps outlined in Table 2:

2. Literature analysis

Studies on the evaluation of digital curation maturity have been scant, but those aiming to create evaluation indicators for similar concepts can be found in various fields. The following sections summarize these studies according to their fields.

2.1 Digital transformation and maturity assessment

Digital transformation involves a complete change in an organization’s working methods, organizational culture, and more based on digital technologies through data utilization (Park & Cho, 2021). Digital transformation transcends digitization, which focuses on the conversion of analog data into digital data, and digitalization, which involves the use of information technology (IT) in business operations and processes based on digital data. Digital transformation represents a more advanced concept that revolutionizes an organization’s overall culture, working methods, and thinking processes around digital technologies.

In the digital transformation context, digital maturity is crucial when evaluating an organization’s adaptation or readiness level for the digital business environment. The concept of digital maturity has evolved from its original role in the field of information systems and software development, which was the assessment of an organization’s holistic management capabilities affecting quality. Recent studies have actively examined digital transformation maturity evaluation models, and the term is now used to signify an organization’s systematic preparation for consistently adapting to digital change (Heo & Cheon, 2021).

Therefore, existing research must be reviewed to achieve the main goal of this study, which is to construct a model for evaluating the level of digital transformation maturity in the context of content curation systems. Such analysis will help collect foundational information for the assessment framework and extract insights to organize considerations for model development. This study initially examined recent maturity assessment models related to digital transformation and proceeded to investigate general quality assessment models, service quality assessment models, process quality assessment models, and data-centric quality assessment models spanning open data, research data, and AI data.

2.2 Digital transformation assessment model

Research perspectives on the diagnosis and measurement of digital transformation maturity indicators can be categorized into (1) macroscopic, top-down and (2) microscopic, bottom-up. Studies adopting the top-down perspective are primarily conducted at the national level, defining industries associated with the digital economy and investigating metrics such as sales, employment, and research and development investments of these industries. They also evaluate broad indicators such as a country’s overall digital accessibility, technological and human resource capabilities, institutional regulations, and social trust. Conversely, studies adopting the bottom-up perspective include cases where institutions (or companies) develop models for assessing digital transformation at an organizational or private unit level.

The IMD publishes its World Digital Competitiveness Ranking, focusing on major categories such as knowledge, technology, and future readiness, with a central framework consisting of 52 evaluation criteria (IMD World Competitiveness Center, 2021). Since 2002, the World Economic Forum has been publishing its Network Readiness Index (NRI), which evaluates the digital capacities of countries and consists of 60 detailed indicators classified under technology, people, governance, and impact. The Organization for Economic Co-operation and Development (OECD) also introduced a comprehensive digital policy framework for assessing national-level digital development status using 33 digital transformation measurement indicators categorized into seven areas: access, use, innovation, jobs, society, trust, and market openness. The SIRI, which was developed by the Singapore government, is based on the IMPULS Foundation’s Industry 4.0 Readiness in Germany and evaluates digital maturity level through 16 detailed classification items under process, technology, and organization categories (Singapore Economic Development Board, 2020).

One notable model in the realm of evaluating institutional (or corporate) and private-unit-level digital transformation is the IMPULS Industry 4.0 Readiness developed by the German Engineering Federation (IMPULS, n.d.). This model rates institutions’ or companies’ readiness in adapting to the Fourth Industrial Revolution. It has six categories: strategy and organization, smart factories, smart operations, smart products, data-driven services, and employees. Its 28 questions allow readiness levels to be determined across six stages. Gartner, a prominent IT research company in the United States, developed a public sector-focused digital transformation assessment tool that contains seven key indicators: vision and strategy, service delivery and quality, organization, organizational readiness, digital projects and investments, the CIO’s role, and data and analytics. The World Bank’s open data quality measurement model focuses on evaluating leadership, open data ecosystems, policy and legal frameworks, organizational responsibility structures within the government, government data, finance, national technology infrastructure, and citizen engagement.

In Korea, studies have examined the development of digital maturity models for digital transformation. The Korea Institute of Public Administration (2021) developed a digital transformation index model to measure digital transformation levels in the public sector, which consists of connectivity, automation, virtualization, and data-based indices. According to Heo and Cheon (2021), the digital maturity model comprises four dimensions: technological readiness, strategic readiness, organizational culture, and human resource readiness. Hong, Choi and Kim (2019) have constructed a digital transformation capability assessment model that is suitable for the domestic context, rating 32 detailed measurement indicators focusing on technological and organizational capabilities.

2.3 Data quality evaluation model

ISO 8000 defines data quality as the value of information assets that enhance business efficiency and support strategic decision-making by providing suitable and accurate data promptly, securely, and consistently (International Organization for Standardization [ISO], 2016, 2022). An organization’s data quality management is a crucial aspect linked to its overall value. Therefore, before configuring indicators for the digital transformation maturity model, we aimed to construct a preliminary model based on a review of data quality measurement models.

ISO/IEC 9126 measures data quality through factors such as functionality, reliability, usability, efficiency, maintainability, and portability. ISO/IEC 25012 further advances these elements by suggesting 15 quality measurement factors: accuracy, completeness, consistency, reliability, currency, accessibility, compliance, confidentiality, efficiency, precision, traceability, understandability, usefulness, portability, and recoverability (International Organization for Standardization [ISO], 2001, 2008).

As a case for measuring open data and public data quality, the National Information Society Agency of Korea (NIA) uses seven indicators: readiness, completeness, consistency, accuracy, security, timeliness, and usefulness (NIA, 2018). Tim Berners-Lee’s five-star open data is also a representative quality measurement metric. The Research Data Alliance’s FAIR Data Maturity Model contains indicators for findability, accessibility, interoperability, and reusability to set common evaluation criteria for research data (RDA FAIR Data Maturity Model WG, 2020).

With the increasing interest in AI data, many studies have investigated quality management requirements for such data. According to the Telecommunications Technology Association, quality measurement indicators may include diversity, comprehensiveness, volatility, reliability of sources, factuality, standard compliance, statistical sufficiency, statistical uniformity, suitability, and label accuracy (Telecommunications Technology Association, 2021).

The NIA, in its AI Data Quality Management Guidelines, incorporated the opinions of various stakeholders to measure AI data quality through 10 indicators: readiness, completeness, usefulness, standard compliance, statistical diversity, semantic accuracy, syntactic accuracy, algorithmic adequacy, and validity (NIA, 2021; 2022). Shin (2021) proposed criteria for verifying AI training data in terms of diversity, syntactic accuracy, semantic accuracy, and validity. Additionally, according to Kim and Lim (2020), quality management items for AI training data include diversity, reliability, fairness, sufficiency, uniformity, factuality, suitability of annotation for functional purposes, clarity of object classification, comprehensiveness of annotation attribute information, and effectiveness of learning.

Research on data quality management systems or processes assumes that an organization’s data quality management occurs not as a singular act at a specific point in time but as an integral part of the overall process. According to ISO 9001, which focuses on quality maintenance and assessment, a continuous plan-do-check-act (PDCA) cycle allows for ongoing business improvement and quality management. Building upon ISO 9001, ISO 8000-61 presents 20 quality management processes based on the cyclic structure of quality planning, quality control, quality assurance, and continuous quality improvement.

Capability maturity model integration (CMMI) is a model that conducts holistic assessments in process management, project management, engineering, and support. The Korea Institute of Information and Communication Technology Promotion developed the PCL quality management maturity model with reference to the plan-build-operate-utilize cycle and proposed the ACL maturity level assessment model to assess the capability levels of activities that constitute processes in subsequent research. Additionally, guidelines were provided to select suitable metrics and apply them during the life cycle of AI training data, encompassing planning, data acquisition, data refinement, data labeling, and data training processes.

A review of digital transformation maturity models at the national or institutional (private) level, quality management systems or models from a data perspective, and those in general software or service domains shows that organizations measure maturity levels mainly through evaluation factors associated with technical aspects, human resources, and governance. National-level evaluations address the societal and economic impact of digital transformation and highlight the link between digital maturity and a country’s social and economic competitiveness. However, existing maturity assessment models lack a dedicated provision for a thorough evaluation of the ‘data’ itself, which is a core management focus for organizations. Therefore, this study addresses the limitations of existing models by considering the addition of evaluation factors for the data itself and factors that assess institutional-level societal impact.

3. Preliminary model configuration for digital curation maturity assessment

The previous section conducted a comprehensive review that encompasses literature up to the digital maturity stage, including digital transformation evaluation models, data quality measurement models, and cases of quality management process models. This analysis revealed different measurement items and scales as well as instances in which different names were used for the same meaning. Therefore, before constructing the preliminary model, this study reorganized the reviewed cases to differentiate scales and indicators and selected scales and indicators to be used in the model by refining and integrating duplicate elements. Table 3 outlines this process.

3.1 Assignment of literature identification numbers

To organize the extensive results of the case studies, the first task was the assignment of identification numbers to each piece of literature. This was performed not only to organize but also to facilitate source verification when constructing elements such as scales or indicators in the future. This task entailed a distinction between cases of digital transformation, data quality measurement, data quality management stages, and maturity stages.

3.2 Criteria setting

The second stage involves setting criteria for designing the preliminary model. In other studies, indicators mostly followed a three-tier structure consisting of major, sub, and minor levels with diverse scopes and depths. To address this, criteria were established to merge similar items and perform consistent mapping. This was based on IMPULS Industry 4.0 from Germany, which is the most referenced model among studies on digital transformation maturity levels. The measurement categories presented in this model include (1) strategy and organization, (2) smart factory, (3) smart operations, (4) smart products, (5) data-based services, and (6) personnel. In the case of strategy and organization, the concept of strategy for digital transformation and the organization’s strategy were distinguished because other models often separate them and define them under different classification criteria. Specifically, organizations were frequently addressed separately, often in conjunction with personnel and other aspects. Therefore, organization was separated from the strategy and organization component and treated as a distinct category.

With regard to smart factory, smart operations, smart products, and data-based services, the content included in other indicators was technology related and was therefore integrated into a single technology category.

- Strategy and organization → Strategy

- Smart factory, smart operations, smart products, data-based services → Technology

- Personnel, strategy and organization → Organization (personnel)

Through this process, the major categories were organized into strategy, technology, and organization (personnel). An additional major category involved the data items under management, which were the main focus of this study.

3.3 Element organization according to criteria

Based on the major categories established in the previous stage—strategy, technology, organization (personnel), and data—the elements derived from each case were mapped and grouped into middle categories and subcategories. In this grouping process, the names of elements, along with their definitions, indicators, and measurement methods, were examined. Similarities were used as criteria to map and group elements that were identical or similar. A merging process was also undertaken for elements with nearly identical names and definitions.

Finally, elements outside the four major categories (e.g., economic influence in the NRI, contribution to quality of life) were classified as miscellaneous items, resulting in a total of five main categories: strategy, technology, data, organization (personnel), and miscellaneous.

3.4 Duplicate and integration refinement

Duplicates were removed and elements were integrated and refined according to the selected main categories. Because the previous steps involved grouping elements based on their names, descriptions, and indicators, this stage focused on verifying whether mapping and refinement had been appropriate.

Specifically, the task involved integrating or creating new categories for main, middle, and subclassifications. “(Social) impact” was ultimately modified to be included in the miscellaneous category. (Social) impact measures the degree to which a country’s digital maturity level contributes to the lives of its citizens, which makes it a significant factor at the macro level, especially in measuring the extent of national-level digital transformation. Although it may not be easily visible in a micro-level assessment of organizational maturity, it was deemed an important evaluation factor in measuring an organization’s digital transformation maturity, given the economic, educational, and environmental impact that the core “data” handled by the organization has on individuals, organizations, and society.

Through the aforementioned process, indicators were mapped to construct the preliminary model. Table 4 shows that the focus was on the main categories of technology, data, strategy, organization (personnel), and (social) impact. Middle classification and subclassification were then conducted. To ensure the traceability of the content of all indicators, each indicator was assigned a source identification number based on its minor classification, and the final preliminary model was established.

Assignment of literature identification numbers for each case study subject for constructing a preliminary model

3.5 Final configuration of the preliminary model

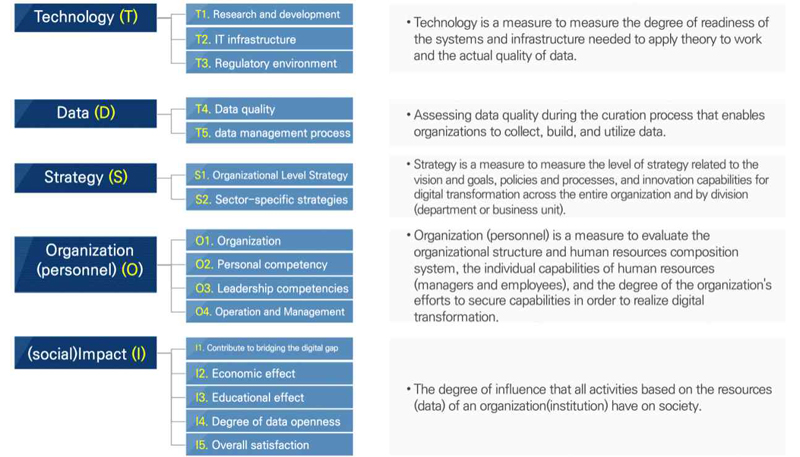

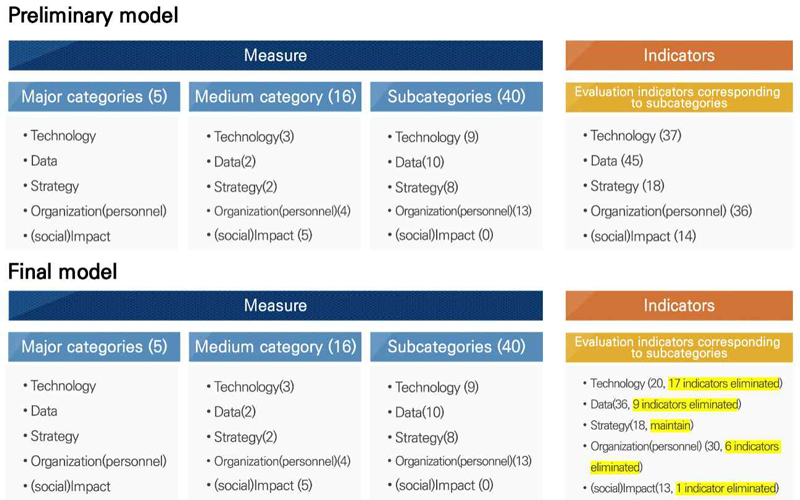

After reviewing the cases, setting the criteria, selecting elements, modifying and merging indicators, and completing the refinement process, the final preliminary model for measuring digital transformation maturity was created, as shown in Figure 1. The evaluation scale consists of 5 main categories, 16 middle categories, and 40 subcategories. Across these subcategories, the model contains 150 evaluation indicators: 37 for technology, 45 for data, 18 for strategy, 36 for organization (personnel), and 14 for (social) impact.

The classification system for the preliminary model, including its main and middle categories along with definitions for the former, is illustrated in Figure 2.

4. Model verification and final model derivation

With regard to the preliminary digital maturity evaluation model derived in this study, it is crucial to confirm whether the conceptual constructs and measurement indicators accurately represent the concepts. To this end, this study performed confirmatory factor analysis to assess convergent and discriminant validity. Convergent validity assumes that if multiple measurement indicators are used to measure a single conceptual construct, various measurement indicators should be highly correlated (Noh, 2019). Meanwhile, discriminant validity assumes that if different conceptual constructs are measured through multiple measurement indicators, their correlation should be low. This validity verification was conducted in three stages:

- Step 1: Critical ratio (CR) values were confirmed based on unstandardized λ values, ensuring that they are above 1.96 (p < 0.05).

- Step 2: Convergent validity was verified using the three criteria below, and measurement indicators that did not exceed the standardized λ value criteria were removed for further validation:

∘ Standardized λ values exceeding 0.7: This study used a threshold of 0.7 as it is required in some specialized areas, while general social science research often uses 0.5 as a criterion (Noh, 2019; Yu, 2012).

∘ Checking if average variance extracted (AVE) values are above 0.5.

∘ Confirming that composite reliability (CR) values are above 0.7.

- Step 3: Discriminant validity was verified through two processes:

∘ Checking if AVE values are greater than the square of the correlation coefficient.

∘ Confirming that there is no “1” within the (correlation coefficient ± 2*standard error) range.
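The step 2 criteria can be illustrated with a short Python sketch. It assumes the widely used formulas based on standardized loadings, in which each indicator's error variance is taken as 1 − λ². The study's own AMOS-based calculation may have used estimated error variances instead, so this is an approximation under that assumption. The function names and the loadings are hypothetical.

```python
def average_variance_extracted(loadings):
    """AVE = mean of squared standardized loadings (error variance assumed 1 - loading^2)."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    loading_sum = sum(loadings)
    error_sum = sum(1 - l * l for l in loadings)
    return loading_sum ** 2 / (loading_sum ** 2 + error_sum)


def check_convergent_validity(loadings, loading_min=0.7, ave_min=0.5, cr_min=0.7):
    """Drop indicators below the loading threshold, then test AVE and composite reliability."""
    kept = [l for l in loadings if l >= loading_min]
    ave = average_variance_extracted(kept)
    cr = composite_reliability(kept)
    return {"kept": kept, "ave": ave, "cr": cr,
            "valid": ave >= ave_min and cr >= cr_min}


# Hypothetical standardized loadings for one latent variable (not values from this study).
result = check_convergent_validity([0.82, 0.76, 0.64, 0.71])
print(result["kept"], round(result["ave"], 3), round(result["cr"], 3), result["valid"])
```

Note that removing low-loading indicators before computing AVE and composite reliability mirrors the order described in step 2. A latent variable left with a single indicator would need separate handling.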

However, in step 3, when conceptual constructs that other studies treat as independent are highly correlated and significantly influence each other, it is more appropriate to cite the studies that used them as independent concepts than to exclude or merge the constructs. Such decisions were made after careful consideration of the constructs’ relevance to this study (Yu, 2012).

The confirmatory factor analysis survey was conducted online using Google Forms and SurveyMonkey from August 16 to September 13, 2022, targeting individuals who performed data management tasks in research institutions, universities, public agencies, and businesses. Survey participants were selected via snowball sampling, starting with the internal staff at the KISTI and expanding through continuous recommendations from relevant agency personnel. A total of 134 responses were obtained; after 40 incomplete or discontinued responses were excluded, 94 valid responses remained. To understand the respondents’ demographics, this study collected additional information on their affiliated organizations, highest education level, and years of work experience (Table 6).

About 65% of the respondents worked in public institutions and research organizations, with 78% holding a master’s or doctoral degree and 57% having more than 11 years of work experience. Cronbach’s alpha was used to verify the internal consistency and reliability of the survey results, showing values above 0.7 in all categories: technology, data, organization, strategy, and social influence. The lowest Cronbach’s alpha coefficient (0.752) was reported in the technology category for T1-1 (R&D investment sector), while the highest coefficient (0.929) was in the data category for D2-2 (readiness sector). Moreover, removing specific items resulted in lower Cronbach’s alpha coefficients, reinforcing internal consistency. Table 7 shows examples of Cronbach’s alpha values in this study.
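The reliability check described above can be sketched in Python as follows. The sketch uses the standard Cronbach's alpha formula with sample variances and also recomputes alpha with each item removed, which is the comparison referred to in the preceding paragraph. The function names and the response matrix are hypothetical and are not the survey data from this study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """rows: one list of item scores per respondent (all rows the same length)."""
    k = len(rows[0])
    items = list(zip(*rows))  # transpose to one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


def alpha_if_item_deleted(rows):
    """Recompute alpha with each item removed in turn."""
    k = len(rows[0])
    return [
        cronbach_alpha([[s for j, s in enumerate(row) if j != drop] for row in rows])
        for drop in range(k)
    ]


# Hypothetical 5-point responses: 4 respondents x 3 items.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 4, 5],
    [2, 2, 3],
]
alpha = cronbach_alpha(responses)
deleted = alpha_if_item_deleted(responses)
print(round(alpha, 3), [round(a, 3) for a in deleted])
```

When every "alpha if item deleted" value is lower than the overall alpha, as in this toy data, no single item is dragging the scale's internal consistency down.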

4.1 Confirmatory factor analysis of the technology category

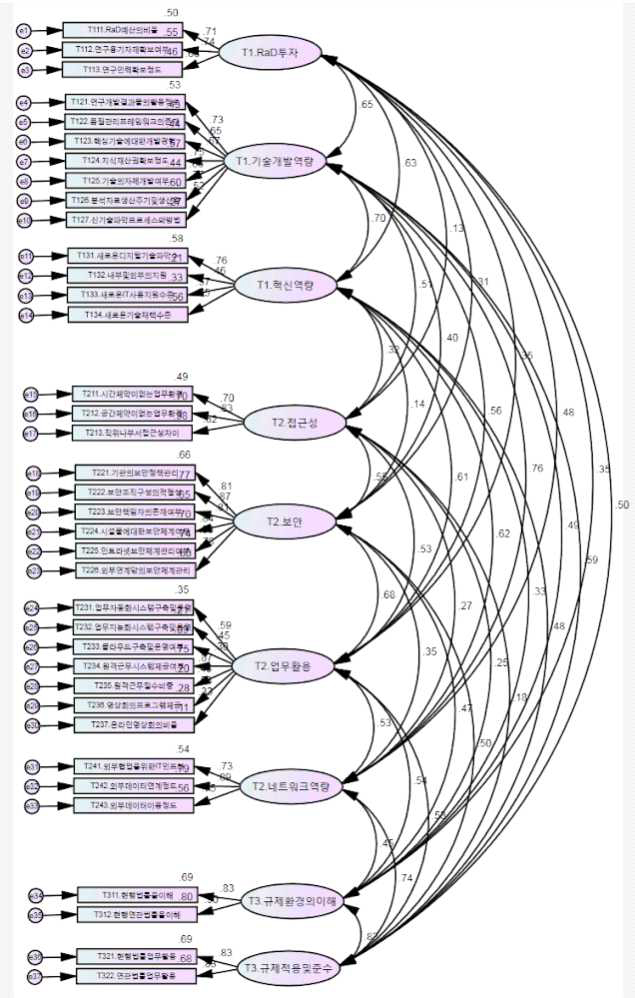

The technology category contained a total of 37 measurement indicators across 9 middle categories. AMOS 22 was used to construct a confirmatory factor analysis model for these (Figure 3).

During the first stage of validation, a comparison of unstandardized λ values, standard errors (SEs), and statistics including p-values within the technology category revealed that, based on the criterion of unstandardized λ values, all critical ratio (CR) values exceeded 1.96 (p < 0.05). Thus, all values met the criteria for the first stage.
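The first-stage check can be expressed as a small Python sketch. It assumes the usual definition of the critical ratio as the unstandardized estimate divided by its standard error, evaluated against a two-tailed standard normal distribution. The indicator labels and numbers are hypothetical.

```python
import math


def critical_ratio(estimate, standard_error):
    """Critical ratio = unstandardized estimate / standard error."""
    return estimate / standard_error


def two_tailed_p(z):
    """Two-tailed p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


def is_significant(estimate, standard_error, threshold=1.96):
    """True when |critical ratio| exceeds the threshold (1.96 corresponds to p < 0.05)."""
    return abs(critical_ratio(estimate, standard_error)) > threshold


# Hypothetical (estimate, SE) pairs for two indicators (not values from this study).
estimates = {"indicator_a": (1.12, 0.21), "indicator_b": (0.35, 0.20)}
for name, (estimate, se) in estimates.items():
    z = critical_ratio(estimate, se)
    print(name, round(z, 2), round(two_tailed_p(z), 4), is_significant(estimate, se))
```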

Regarding convergent validity verification, the first step excluded measurement indicators with standardized λ values below 0.7. In addition, 15 measurement indicators were challenging to exclude because doing so would leave only one measurement indicator each for the accessibility and task utilization latent variables. This would not only hinder the assessment of the relative importance of the single remaining indicator but also present a statistical challenge in confirming discriminant validity. To address this, a further two measurement indicators within the accessibility and task utilization latent variables were excluded. Table 8 shows detailed information.

Furthermore, AVE values and composite reliability (CR) values were examined, and the results showed that both were above 0.5 and 0.7, respectively, in all areas, confirming convergent validity. Table 9 provides detailed numerical values.

Statistics, average variance extracted (AVE) values, and composite reliability (CR) values used to verify the convergent validity of the technology scale

The third step confirmed discriminant validity through the correlation of measurement indicators among the conceptual constructs and whether the AVE values were greater than the square of the correlation coefficient. Table 10 summarizes the comparison between correlation coefficients (squared) among the conceptual constructs and AVE values. Table 11 presents the results of the (correlation coefficient ± 2*SE) range.
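Both discriminant validity checks can be sketched in Python. The sketch assumes that a pair of constructs passes the first check only when both AVE values exceed the squared correlation, and that it passes the second check when the interval (correlation coefficient ± 2*SE) does not contain 1. The function names and the numbers are hypothetical.

```python
def ave_exceeds_squared_correlation(ave_a, ave_b, correlation):
    """First check: both constructs' AVE values must exceed the squared correlation."""
    return min(ave_a, ave_b) > correlation ** 2


def interval_excludes_one(correlation, standard_error):
    """Second check: the range (correlation +/- 2*SE) must not contain 1."""
    lower = correlation - 2 * standard_error
    upper = correlation + 2 * standard_error
    return not (lower <= 1.0 <= upper)


def discriminant_validity(ave_a, ave_b, correlation, standard_error):
    """Report both checks for one pair of constructs."""
    return {
        "ave_check": ave_exceeds_squared_correlation(ave_a, ave_b, correlation),
        "interval_check": interval_excludes_one(correlation, standard_error),
    }


# Hypothetical construct pairs (not values from this study).
distinct_pair = discriminant_validity(0.58, 0.61, correlation=0.62, standard_error=0.08)
overlapping_pair = discriminant_validity(0.58, 0.61, correlation=0.93, standard_error=0.05)
print(distinct_pair)
print(overlapping_pair)
```

A pair that fails the interval check, like the second example, is statistically indistinguishable from a perfectly correlated pair, which is the situation that leads to merging two constructs.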

The validation results showed that the AVE values exceeded the squared correlation coefficients between all conceptual constructs. However, the (correlation coefficient ± 2*SE) range for T3. Understanding regulatory environment <--> T3. Regulatory application and compliance included 1. Measurement indicators related to regulations were extracted from the IMD World Competitiveness Center (2021) and Portulans Institute (2021) models. Although these indicators were initially divided into subcategories for understanding and applying the regulatory environment, discriminant validity was not confirmed. The two were therefore integrated under the term “regulatory environment,” which is also used in other studies. Table 12 presents the intermediate classifications and final measurement indicators for the technology category after confirmatory factor analysis.

4.2 Confirmatory factor analysis of the data category

The data category had a total of 45 measurement indicators distributed across 10 subcategories. Table 13 shows the unstandardized λ values, SEs, p-values, and standardized λ values. After confirming that the critical ratios (CR) of the unstandardized λ values exceeded 1.96 (p < 0.05) and applying the criterion of standardized λ values above 0.7, a total of 9 measurement indicators with standardized coefficients below 0.7 were excluded, as shaded in Table 13.
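The screening rule described above can be expressed as a short sketch. It assumes that the critical ratio is the unstandardized λ value divided by its SE, which is the usual definition; the `Indicator` class, the indicator names, and the numbers are hypothetical and are not taken from Table 13.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    name: str
    unstd_loading: float  # unstandardized lambda
    std_error: float      # SE of the unstandardized lambda
    std_loading: float    # standardized lambda


def critical_ratio(indicator):
    """Critical ratio: unstandardized loading divided by its standard error."""
    return indicator.unstd_loading / indicator.std_error


def keep_indicator(indicator, ratio_min=1.96, loading_min=0.7):
    """Retain an indicator only if it is significant and loads at 0.7 or above."""
    return (abs(critical_ratio(indicator)) > ratio_min
            and indicator.std_loading >= loading_min)


# Hypothetical indicators for one subcategory.
candidates = [
    Indicator("D1-1", 1.05, 0.10, 0.81),
    Indicator("D1-2", 0.88, 0.12, 0.64),  # significant but loads below 0.7
]
retained = [item.name for item in candidates if keep_indicator(item)]
print(retained)
```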

After checking the AVE and composite reliability values, convergent validity was confirmed in all areas, with values of 0.5 and above and 0.7 and above, respectively. However, comparing the squares of the correlation coefficients with the AVE values, except for diversity <=> utility, utility, utility <=> suitability, and all other structural concepts, timeliness <=> readiness, completeness, utility; interoperability <=> utility, security, maintenance; maintenance <=> readiness, completeness, security; and completeness <=> utility, in a total of 18 areas, AVE values exceeded the upper limit, indicating inadequate discriminant validity. A reevaluation of the (correlation coefficient ± 2*SE) ranges showed that discriminant validity was not confirmed in 9 areas: utility <=> timeliness, interoperability, completeness, utility; timeliness <=> readiness; interoperability <=> maintenance, security; maintenance <=> security; and completeness <=> security.

Even if verification were conducted within the (correlation coefficient ± 2*SE) range, the extremely high correlation in at least nine areas means that the differences between the structural concepts could not be confirmed statistically. However, a review of these structural concepts shows that even though they are not the same, they may have a high correlation because they are related. For example, interoperability and utility showed a higher correlation coefficient than the threshold. While interoperability and utility are distinct, high interoperability naturally leads to high utility, explaining the high correlation coefficient. In the initial digital maturity assessment model developed in this study, the structural concepts were derived from concepts and metrics used in various fields and studies (utility: ISO/IEC 25012; RDA FAIR Data Maturity Model WG (2020); timeliness: ISO/IEC 9126; ISO/IEC 25012; interoperability: RDA FAIR Data Maturity Model WG (2020); completeness: NIA (2021, 2022); TTAK.KO-10.1339; CMMI (Lanin, 2008); ISO 9001; usefulness: ISO 8000-61; NIA (2021, 2022); TTAK.KO-10.1339; CMMI (Lanin, 2008); ISO 9001; timeliness: ISO/IEC 25012; ISO/IEC 9126; TTAK.KO-10.1339; readiness: NIA (2018, 2021, 2022); ISO 8000-150; CMMI (Lanin, 2008); Kim, Lee & Lee (2017); maintainability: ISO/IEC 9126; security: NIA (2018); ISO/IEC 9126). Therefore, excluding or merging structural concepts based solely on high correlation might cause an evaluation model to fail to assess concepts commonly used in various models.

Furthermore, because these structural concepts are deemed essential in the data category, they were not excluded or merged in this model. Table 14 shows the final subcategories and metrics for the data category.

4.3 Confirmatory factor analysis of the strategy category

The strategy category had a total of 18 measurement indicators distributed across 8 subcategories. Table 15 shows unstandardized λ values, SEs, p-values, and standardized λ values. After confirming that the unstandardized λ values met the critical ratio (CR) condition exceeding 1.96 (p < 0.05), and considering standardized λ values above 0.7, no measurement indicators in the strategy category were specifically excluded because all standardized λ values exceeded 0.7.

The AVE and composite reliability values exceeded 0.5 and 0.7, respectively, in all areas, confirming satisfactory convergent validity. However, a discriminant validity issue was identified when the squares of the correlation coefficients were compared with the AVE values. Specifically, in the R&D strategy <=> businessization strategy area, only the AVE value exceeded the upper limit, indicating inadequate discriminant validity. Nevertheless, a reevaluation of the (correlation coefficient ± 2*SE) range showed no issues. Table 16 shows the final middle categories and measurement indicators for the strategy category.

4.4 Confirmatory factor analysis of the organization category

The organization category had a total of 35 measurement indicators distributed across 12 subcategories. Table 17 presents the unstandardized λ values, SEs, p-values, and standardized λ values. After confirming that the unstandardized λ values met the critical ratio (CR) condition exceeding 1.96 (p < 0.05), and considering standardized λ values above 0.7, six measurement indicators in the organization category were excluded for having standardized λ values below 0.7.

The AVE and composite reliability values were above 0.5 and 0.7, respectively, in all areas, indicating satisfactory convergent validity. However, issues in discriminant validity were identified. Under the (correlation coefficient ± 2*SE) criterion, problems were found in seven areas: work resilience <=> organizational technical competence, change preparedness, personnel management, task leadership; change preparedness <=> technical management competence; and leadership role of management CIO <=> leadership system, talent management.

Despite the high correlation coefficients in these areas, they represent different concepts that have been used in various studies (Heo & Cheon, 2021; Hong, Choi & Kim, 2019; Gartner, n.d.; IMD World Competitiveness Center, 2021; IMPULS, n.d.; Portulans Institute, 2021; Singapore Economic Development Board, 2020). Considering potential issues in the completeness of the evaluation model, this study did not exclude or integrate these areas. Table 18 shows the final subcategories and measurement indicators for the organization category.

4.5 Confirmatory factor analysis of the social influence category

The social influence category had a total of 18 measurement indicators across 5 subcategories. Table 19 shows the unstandardized λ values, SEs, p-values, and standardized λ values. Following the criterion of unstandardized λ values with critical ratios (CR) exceeding 1.96 (p < 0.05) and considering standardized λ values above 0.7, one measurement indicator with a standardized λ value below 0.7 was identified and excluded from the social influence category.

After confirming the AVE and composite reliability values, all areas were confirmed to have achieved convergent validity, with values exceeding 0.5 and 0.7, respectively. Additionally, a comparison of the squares of the correlation coefficients with the AVE values showed that the AVE values in all areas were below the lower limit, indicating appropriate discriminant validity. Table 20 outlines the final middle categories and measurement indicators for the social influence category.

5. AHP analysis for weighting digital curation maturity model indicators

This study employed the AHP technique to derive relative weights for elements within the main and middle categories of the model, aiming for its objective use. Specific steps in AHP, such as the detailed scoring and feedback phases, were excluded as they were deemed inappropriate; instead, the focus was on general procedures, particularly weighting and consistency testing.

5.1 Survey questionnaire design

To measure weights within the main and middle categories of the digital maturity assessment model in line with the research objectives, a questionnaire was developed that allowed individual evaluators to perform pairwise comparisons indicating the relative importance of, or preference between, classification items. A total of 31 questions were presented, consisting of 10 questions for the 5 main categories and 21 questions for the 16 middle categories. The survey questions pertained to the relative importance of the main and middle categories outlined in the digital transformation maturity model, as illustrated in Figure 4. Respondents were instructed to rate the relative importance of two items for each question as “very important,” “important,” “similar,” “not important,” or “not very important.”
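The number of questions follows from the pairwise design: comparing n items requires n(n - 1)/2 questions. The short sketch below enumerates the pairs for the five main categories; the helper name `pairwise_question_count` is hypothetical.

```python
from itertools import combinations


def pairwise_question_count(n_items):
    """Number of pairwise comparisons needed for n_items items."""
    return n_items * (n_items - 1) // 2


main_categories = ["technology", "data", "strategy", "organization", "social influence"]
pairs = list(combinations(main_categories, 2))
print(len(pairs))  # 10, matching the 10 main-category questions
```

The same count applied within each main category and summed over the five categories gives the number of middle-category questions.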

The survey was conducted using the SurveyMonkey platform, a professional survey service, from September 22 to 26, 2022. Its respondents were the participants in the confirmatory factor analysis survey. A total of 48 individuals responded. Tables 21 to 23 provide details regarding the demographic distribution of respondents according to affiliation type, highest educational attainment, and years of work experience.

Among the respondents, 46% were affiliated with research institutions, representing the largest group. Including respondents who belonged to public institutions, this figure increased to 76%. In terms of the highest educational attainment, master’s degrees accounted for the largest proportion at 60%; when doctoral degrees were included, the proportion rose to 92%. Additionally, although no significant variations were observed in the respondents’ work experience, the range of 11-20 years constituted the largest proportion at 49%. Overall, 69% of the respondents had 11 or more years of work experience.

5.2 Weight measurement

To measure the weights, the opinions of all respondents on each comparison item must be consolidated. To this end, this study applied the geometric mean method, which calculates, for each item, the geometric mean of the responses from participants who satisfied all consistency indices. The resulting geometric mean is then treated as the overall opinion of all respondents. This method is frequently used when the expertise of all respondents is assumed (Yoo, 2012).
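The aggregation step described above can be sketched as follows. The element-wise geometric mean mirrors the method named in the text; deriving the weights by normalizing each row's geometric mean is an assumption made here for illustration, because the study does not state which prioritization method it applied. All function names and sample judgments are hypothetical.

```python
import math


def aggregate_judgments(matrices):
    """Element-wise geometric mean of several pairwise comparison matrices."""
    size = len(matrices[0])
    count = len(matrices)
    return [
        [math.prod(m[i][j] for m in matrices) ** (1 / count) for j in range(size)]
        for i in range(size)
    ]


def priority_weights(matrix):
    """Approximate weights: normalized geometric mean of each row (assumed method)."""
    row_means = [math.prod(row) ** (1 / len(row)) for row in matrix]
    total = sum(row_means)
    return [value / total for value in row_means]


# Two hypothetical respondents comparing two items.
respondent_a = [[1, 2], [1 / 2, 1]]
respondent_b = [[1, 8], [1 / 8, 1]]
group_matrix = aggregate_judgments([respondent_a, respondent_b])
print(group_matrix)
print(priority_weights(group_matrix))
```

The geometric mean preserves the reciprocal property of the comparison matrix, which an arithmetic mean of the responses would not.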

5.3 Consistency verification

A consistency check was conducted after the weights were determined. Although pairwise comparisons in AHP are simple to perform, a consistency check is essential for precise results. Hence, this study multiplied the non-normalized pairwise comparison matrix by the weights and averaged the results to derive λ. Consistency was subsequently verified through the consistency index (CI) and the consistency ratio (CR). If the CR value is below 0.1, the responses in the pairwise comparison matrix are considered logically consistent (Choi, 2020). In this study, with a matrix of size n = 5, the λ value was 5.07254333, the CI value was 0.01813583, and the CR value was 0.01619271. The random index (RI) value (an average CI value for 100 randomly generated reciprocal matrices of a given size n) was 1.12. Since the CR value was below 0.1, consistency was confirmed. Table 25 shows the detailed numerical values.
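The reported figures can be reproduced with the standard AHP definitions CI = (λ - n)/(n - 1) and CR = CI/RI. The sketch below applies them to the values stated above; the λ estimate follows the usual procedure of multiplying the comparison matrix by the weights, dividing each result by the corresponding weight, and averaging. The function names are hypothetical.

```python
def lambda_max(matrix, weights):
    """Estimate the principal eigenvalue from a comparison matrix and its weights."""
    size = len(matrix)
    ratios = []
    for i in range(size):
        weighted_sum = sum(matrix[i][j] * weights[j] for j in range(size))
        ratios.append(weighted_sum / weights[i])
    return sum(ratios) / size


def consistency_index(lam, size):
    """CI = (lambda - n) / (n - 1)."""
    return (lam - size) / (size - 1)


def consistency_ratio(ci, random_index):
    """CR = CI / RI."""
    return ci / random_index


# Values reported in the text for the five main categories (n = 5, RI = 1.12).
ci = consistency_index(5.07254333, 5)
cr = consistency_ratio(ci, 1.12)
print(round(ci, 8), round(cr, 8))  # agrees with the reported CI and CR
print(cr < 0.1)
```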

In the same way, weights were derived for the middle categories within each main category.

5.4 Result of deriving weights for the main and middle categories

The weights measured and validated above were organized by main and middle categories, and the final weights were derived by multiplying the weight of each main category by the weights of its middle categories.
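The multiplication step can be illustrated with a minimal sketch. The category labels and weight values below are hypothetical placeholders chosen for easy arithmetic; they are not the weights derived in this study.

```python
def final_weights(main_weights, middle_weights):
    """Multiply each main-category weight by the weights of its middle categories."""
    result = {}
    for main, main_weight in main_weights.items():
        for middle, middle_weight in middle_weights[main].items():
            result[(main, middle)] = main_weight * middle_weight
    return result


# Hypothetical weights: each level sums to 1 within its own group.
main_weights = {"main A": 0.6, "main B": 0.4}
middle_weights = {
    "main A": {"middle A1": 0.5, "middle A2": 0.5},
    "main B": {"middle B1": 0.25, "middle B2": 0.75},
}
combined = final_weights(main_weights, middle_weights)
for key, value in combined.items():
    print(key, round(value, 4))
```

Because the weights at each level sum to 1, the final weights also sum to 1, and a middle category in a less dominant main category can still receive the highest final weight when its local weight is large.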

A comprehensive weight synthesis showed that the technology category was the most dominant among the main categories and that, among the middle categories, research and development within the technology category was the most dominant. However, the final weights showed that the middle category with the highest weight was data quality in the data category, with a weight exceeding 0.16, followed by research and development in the technology category (0.12591379) and organizational strategy level in the strategy category (0.11704421).

6. Discussion

Although the validation process involved multiple experts, this does not guarantee the suitability of the results as a model for measuring digital transformation, for several reasons. First, the professional criteria that the experts used to derive indicators and weights were variable and difficult to standardize. Hence, further discussions must be conducted to determine whether factors such as workplace, tenure, field of work, and education can be considered clear criteria for expertise.

Second, statistical validation may exclude certain elements as indicators even though these elements may still be valid in specific situations. Different results are also possible depending on the experts involved.

Lastly, although this study adopted AHP for weight assignment through pairwise comparisons, the number of pairwise comparison items in this approach grows rapidly as the number of items being compared increases. Therefore, this study derived weights only down to the middle-category level. To enhance the model’s practical utility, weight derivation must be extended to detailed items. To this end, it might be worthwhile to explore options such as reducing the number of items, for example by incorporating a high-order equation technique based on confirmatory factor analysis data rather than applying AHP directly.

7. Conclusion and recommendation

The most fundamental function of the digital transformation maturity assessment model is to evaluate the current state of an organization, providing a means for control and suggesting actions for the future. It can serve as a framework for enhancing awareness and improvement in the analytical aspect, ensuring quality and reducing errors in the organization’s key resources and services.

The model’s significance rests in its reflection of different data types and data management processes and adoption of a digital transformation perspective. In addition, its evaluation criteria were structured to allow for both quantitative and qualitative assessments. To help organizations establish evaluation criteria, the model categorizes them into technology, data, strategy, organization, and influence, making it possible to utilize them by sector. Importance is assigned to each criterion, which guides organizations in structuring evaluation items. The model is also meaningful in that it investigates several cases to derive its construction indicators. The derivation of weights for practical application also adds significance to the model.

The weight results indicate that, when evaluating the maturity of a digital curation institution from a digital transformation perspective, technological advancement is the most crucial factor, closely followed by data. Within the technological domain, the strength of research and development is more critical than the current state of IT infrastructure, reflecting expert insights into what is inherently crucial for technological advancement and highlighting the importance of technical investments.

In addition, data quality management significantly influences maturity assessment, highlighting the importance of not only having data but also ensuring well-managed and usable data.

Moreover, an organization’s overall strategic responsiveness has a significant impact on maturity. Within the organizational domain, an organization’s composition carries more weight than individual and leadership capabilities. This suggests that, for an optimal utilization of individual capabilities in digital curation maturity, organizational attention and structuring must focus on how the organization is configured.

With regard to social influence, this study revealed a prioritization of fundamental aspects such as addressing digital disparities and economic effects over systemic satisfaction, underscoring the significance of excelling in essential digital curation functions rather than merely meeting surface-level conditions for a positive impact on maturity assessment.

Digital transformation goals are challenging and cannot be easily attained within a short period. Moreover, the factors necessary for accomplishment are diverse and subject to change based on technological advancements and societal factors. The AHP analysis results in this paper shed light on what must be considered crucial when focusing on digital curation in institutions that manage and service digital knowledge information resources, especially in the digital transformation context.

Although AHP results are not absolute standards, this study indicates that technology and data are currently more critical to digital transformation than nontechnical factors, reflecting the perspectives of institutions that prepare and implement digital transformation. Despite the importance of balanced investments and execution across all factors, the results show that addressing challenges in technical aspects should take precedence.

In addition, while digital transformation is a crucial global challenge, identifying prominent success stories remains difficult. The models and metrics derived from this study must be tested in the field to determine their practical applicability for direct measurement. Furthermore, future studies must develop and refine new indicators tailored to each institution’s objectives. Finally, the weights for each area and question must be investigated for the practical and rational use of this maturity model in the future.

Acknowledgments

This research was supported by the 2022 Basic Project of the Korea Institute of Science and Technology Information (KISTI) titled “Establishment of an Intelligent Science and Technology Information Curation System” (Project Number: K-22-L01-C01-S01).

References

- Choi, M. C. (2020). Evaluation of analytic hierarchy process method and development of a weight modified model. Management & Information Systems Review, 39(2), 145-162. https://doi.org/10.29214/damis.2020.39.2.009

- Data Observation Network for Earth (n.d.). DataONE best practices primer for data package creators. https://repository.oceanbestpractices.org/bitstream/handle/11329/502/DataONE_BP_Primer_020212.pdf

- Gartner. (n.d.). Digital Government Maturity. Retrieved April 21, 2025, from https://surveys.gartner.com/s/DigitalGovernmentMaturity

- Heo, M., & Cheon, M. (2021). A Study on the Digital Transformation Readiness Through Developing and Applying Digital Maturity Diagnosis Model: Focused on the Case of a S Company in Oil and Chemical Industry. Korean Management Review, 50(1), 81-114. https://doi.org/10.17287/kmr.2021.50.1.81

- Hong, S., Choi, Y., & Kim, G. (2019). A Study of Development of Digital Transformation Capacity. Journal of The Korea Society of Information Technology Policy & Management, 11(5), 1371-1381.

- IMD World Competitiveness Center. (2021). IMD World Digital Competitiveness Ranking 2021. Retrieved April 22, 2025, from https://investchile.gob.cl/wp-content/uploads/2022/03/imd-world-digital-competitiveness-rankings-2021.pdf

- IMPULS. (n.d.). IMPULS Industrie 4.0-Readiness Online-Selbst-Check für Unternehmen. Retrieved April 21, 2025, from https://www.iwconsult.de/projekte/industrie-40-readiness/

- International Organization for Standardization & International Electrotechnical Commission. (2001). Software engineering — Product quality — Part 1: Quality model (ISO/IEC 9126-1:2001).

- International Organization for Standardization & International Electrotechnical Commission. (2008). Software engineering — Software product Quality Requirements and Evaluation (SQuaRE) — Data quality model (ISO/IEC 25012:2008).

- International Organization for Standardization. (2016). Data quality — Part 61: Data quality management: Process reference model (ISO 8000-61:2016).

- International Organization for Standardization. (2022). Data quality — Part 150: Data quality management: Roles and responsibilities. (ISO 8000-150:2022).

- Jung, H. (2007). A Study of the Data Quality Evaluation. Journal of Internet Computing and Services, 8(4), 119-128.

- Kim, M., & Kim, M. (2020). Data quality control for data dams. TTA Journal, 192, 34-40.

- Korea Education and Research Information Service. (2021). A plan to establish inclusive future education governance in response to digital transformation. Daegu Metropolitan City: Korea Education and Research Information Service.

- Korea Institute of Public Administration. (2021). Development and Utilization of Digital Level Diagnosis Model in Public Sector. Seoul: Korea Institute of Public Administration.

- Lanin, I. (2008). Capability Maturity Model Integration (CMMI). Retrieved April 21, 2025, from https://pt.slideshare.net/ivanlanin/capability-maturity-model-integrity-cmmi/6

- National Information Society Agency. (2018). Public Data Quality Management Manual v2.0. Daegu Metropolitan City: National Information Society Agency.

- National Information Society Agency. (2021). Data Quality Management Guidelines for AI Training v1.0. Retrieved April 21, 2025, from https://aihub.or.kr/aihubnews/qlityguidance/view.do?pageIndex=1&nttSn=10041&currMenu=&topMenu=&searchCondition=&searchKeyword=

- National Information Society Agency. (2022). Data Quality Management Guidelines for AI Training v2.0. Retrieved April 21, 2025, from https://aihub.or.kr/aihubnews/qlityguidance/view.do?currMenu=131&topMenu=103&nttSn=9831

- National Library of Korea. (2021, September 28). A national library leading the digital transformation. https://www.nl.go.kr/NL/contents/N50603000000.do?schM=view&id=40107&schBcid=normal0302

- National Science and Technology Data Center Content Curation Center. (2020). Establishment of science and technology content curation system. Daejeon: Korea Institute of Science and Technology Information. Retrieved April 21, 2025, from https://repository.kisti.re.kr/handle/10580/17347

- Noh, K. (2019). The proper methods of statistical analysis for dissertation. Seoul: Hanbit Academy.

- OECD. (2019). Measuring the Digital Transformation: A Roadmap for the Future. Retrieved April 22, 2025, from https://www.oecd.org/en/publications/measuring-the-digital-transformation_9789264311992-en.html https://doi.org/10.1787/9789264311992-en

- Parasuraman, A., Zeithaml, V. A., & Berry, L. L. (1988). SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality. Journal of Retailing, 64(1), 12-40.

- Park, S., & Cho, K. (2021). The successful start of digital transformation. Samsung SDS Insight Report. Retrieved April 21, 2025, from https://www.samsungsds.com/kr/insights/dta.html

- Portulans Institute. (2021, December 2). Network Readiness Index 2021. Retrieved April 21, 2025, from https://networkreadinessindex.org/nri-2021-edition-press-release/

- Principe, P., Manghi, P., Bardi, A., Vieira, A., Schirrwagen, J., & Pierrakos, D. (2019). A user journey in OpenAIRE services through the lens of repository managers. Retrieved April 21, 2025, from https://repositorium.sdum.uminho.pt/bitstream/1822/60527/3/OpenAIRE_OR2019_workshop_2nd_all.pdf

- RDA FAIR Data Maturity Model WG. (2020). FAIR Data Maturity Model: Specification and Guidelines. Retrieved April 21, 2025, from http://www.rd-alliance.org/groups/fair-data-maturity-model-wg

- Rhee, G., Yurb, P., & Ryoo, S. Y. (2020). Performance Measurement Model for Open Big Data Platform. Knowledge Management Research, 21(4), 243-263. https://doi.org/10.15813/kmr.2020.21.4.013

- Saaty, T. L. (1980). The Analytic Hierarchy Process: Planning, Priority Setting, Resource Allocation. Virginia: McGraw-Hill International Book Company.

- Shin, J. (2021). Data quality verification method for artificial intelligence learning. Journal of the Electronic Engineering Society, 48(7), 28-34.

- Singapore Economic Development Board. (2020). The Smart Industry Readiness Index. Retrieved April 21, 2025, from https://www.edb.gov.sg/en/about-edb/media-releases-publications/advanced-manufacturing-release.html

- Stuart, D., Baynes, G., Hrynaszkiewicz, I., Allin, K., Penny, D., Lucraft, M., & Astell, M. (2018). Whitepaper: Practical challenges for researchers in data sharing (Version 1). figshare. https://doi.org/10.6084/m9.figshare.5975011.v1

- Telecommunications Technology Association. (2021). Data quality management requirements for supervised learning (TTAK.KO-10.1339:2021).

- Yoo, S. (2012). A study on evaluation model of business process management systems based on analytical hierarchy process. Management & Information Systems Review, 31(4), 433-444. https://doi.org/10.29214/damis.2012.31.4.018

- Yu, J. (2012). Professor Jong-pil Yu’s concept and understanding of structural equations. Seoul: Hannarae Publishing House.

Seonghun Kim is an Affiliate Professor of Sungkyunkwan University iSchool: LIS and Data Science, Seoul, The Republic of Korea. He teaches courses on web databases, system design and information retrieval. His main research interests are deep learning and LLM-based recommendation systems, and he is researching academic paper recommendation systems with research support from the National Research Foundation of Korea.

Jinho Park is an Assistant Professor in the Knowledge, Information and Culture Track at Hansung University, South Korea. He teaches courses on databases, data analysis and information retrieval. He is a board member of the Korean Library and Information Society, the Korean Society of Records Management, and the Korean Library Association, and an expert member of ISO/TC46 Korea. He has led the informatics department of the National Digital Library of Korea and the Arts Information Centre of the Korea National University of Arts. His main research interest is open data. His research focuses on building new information ecosystems and management and service systems through data openness, including open data quality assessment.