A Study on the Reliability Evaluation Index Development for the Information Resources Retained by Institutions: Focusing on Humanities Assets

Abstract

This study aims to develop an index for evaluating the reliability of the information resources held by institutions that retain humanities assets, in order to lay the foundation for a one-stop portal service for humanities assets. To this end, the evaluation index was derived through an analysis of previous research, case studies, and interviews with experts, and was then applied to institutions retaining humanities assets to verify its utility. The reliability evaluation index for institutional information resources consists of two dimensions: the institutions’ own reliability evaluation index and the evaluation index for the services and systems the institutions provide. The institutions’ own reliability evaluation index allots 25 points to institutional authority, 25 points to data collection and construction, 30 points to data provision, and 20 points to appropriateness of data, for a total of 100 points. The service and system evaluation index allots 25 points to information quality, 15 points to appropriateness (recency), 15 points to accessibility, 20 points to tangibility, 15 points to form, and 10 points to cooperation, for a total of 100 points. The utility of the derived index was verified by applying it to 6 institutions representing humanities assets. The information resources retained by the Research Information Service System (RISS) of the Korea Education & Research Information Service (KERIS) showed the highest reliability.

Keywords:

Humanities Assets, Evaluation Index, Information Resources, Reliability, Humanities Assets Evaluation

1. Introduction

With the mass production of information resources that has accompanied the development of information technology, the distribution of information resources has become increasingly active. In particular, services rendered through cooperation among information-producing institutions are growing rapidly, and the reliability of the information resources retained by these institutions has become extremely important.

Institutions that recognize the importance of information resources have been established to collect, preserve, and provide them. Yet questions about the reliability of the information resources retained by each institution have continued to be raised. In this light, an index that can objectively measure the reliability of the information resources retained by each institution has become necessary.

A review of reliability evaluation indexes for information resources, undertaken in light of the growing importance of the humanities, shows that most indexes to date have focused on technology information resources and have relied on quantitative aspects such as the number of SCI papers and recency. Such indexes are of limited use for humanities assets, because the humanities tend to prioritize appropriateness over the recency of information resources, and published books over journals.

In the era of knowledge information, where change takes place in real time, scholarly results that are not accumulated on the basis of reliability will ultimately be rejected by users. In this study, we develop evaluation elements for measuring the reliability of academic information collected through various routes and apply them so that users can continue to rely on that information. Furthermore, we attempt to analyze not only the reliability of the individual research achievements currently provided but also the reliability of the systems that provide them, by articulating and applying the notions of components and systems that lie at the core of reliability theory.

Therefore, this study aims to develop an index for evaluating the reliability of the information resources held by institutions that retain humanities assets, in order to lay the foundation for a one-stop portal service for humanities assets.

2. Literature Review

Reliability is expressed in terms of probability and is defined as “the probability that a system, machine, or component will perform a given function for an intended period under specified conditions.” While scholars have offered varying views on the concept of reliability, Fogg and Tseng (1999) defined it basically as “believability” and “perceived quality.” Perceived quality signifies an attribute that emerges from human perception rather than something inherent in an object, a person, or a piece of information itself. Moreover, reliability is a perception that results from evaluating several aspects simultaneously. Although scholars’ views on credibility vary, they can be classified into two common elements, “trustworthiness” and “expertise,” which together determine whether a source is worth trusting. The trustworthiness aspect is described by terms such as “well-intentioned,” “truthful,” and “unbiased,” and expresses the perceived morality or ethics of the information source. The expertise aspect is described by terms such as “knowledgeable,” “experienced,” and “competent,” and expresses the perceived knowledge and skill of the information source (Kim, 2007b, 96-97).
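In reliability engineering, the probabilistic definition quoted above is commonly formalized as a reliability function. The notation below (T for a system's time to failure, F for its cumulative distribution function, λ for a constant failure rate) is standard textbook usage rather than something taken from this study, and is shown only to make the definition concrete:

```latex
% Reliability at time t: probability the system is still functioning after t
R(t) = \Pr(T > t) = 1 - F(t)
% Example, assuming a constant failure rate \lambda (exponential lifetime):
R(t) = e^{-\lambda t}
```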

Reliability analysis originated in the United States in the early 1940s with the systematization of quality control. It has been expressed in various forms, such as reliability, survival time, and failure time, but the subjects whose life spans were analyzed were mainly engineering systems (Yoon, 1996). Starting from engineering, reliability analysis has since expanded into fields such as medicine, insurance, and finance, and as systems have become increasingly complex and diverse, reliability has become an important concept for all systems.

2.1 Research on the evaluation of Web information resources

As a study on reliability evaluation criteria for Web information resources, Standler (2004) examined “peer review,” “credentials of the author,” and “writing style” as the three traditional evaluation methods. For peer review, whether a work has been published by a well-known publisher or in an academic journal can be an important criterion. The author’s external credibility is evaluated by, for example, whether the author obtained a doctoral degree from a reputable university. For writing style, Standler identifies as important criteria the number of citations or footnotes, the extent of typographical or grammatical errors, the appropriateness of vocabulary, internal consistency, and the dates of last modification and publication. However, he emphasized that information reliability is a property of the information itself rather than a matter of expert opinion, implying that the traditional criteria may vary with the characteristics of each field. He also pointed out the need to evaluate the reliability of Web information resources and argued that the traditional criteria alone are not sufficient for this purpose. Fogg et al. (2002) analyzed the conditions affecting the reliability of websites and grouped them into expertise factors, trustworthiness factors, and sponsorship factors. Among the expertise factors, quick responses to customer inquiries and an easy, convenient search process had a positive effect on website reliability, whereas errors such as spelling mistakes had a negative effect. Among the trustworthiness factors, useful past experiences with the site, the ability to contact the organization managing the site, and privacy policies operated as positive factors.
As for the sponsorship factors, advertising the website in other media was a positive factor, whereas unclear boundaries between advertising and content, pop-up windows, and the like were negative factors. Among the other factors, website updates and professional design were positive, whereas difficult navigation was negative. Fogg et al. (2003) analyzed users’ comments on website reliability and found that professional and visual design was the most important factor in their reliability evaluations; this included website design, layout, images, fonts, margins, and color configuration. Other factors in users’ reliability judgments included the structure and focus of the website’s information, the purpose of the website, the usefulness and accuracy of the information, reputation, bias of the information, tone of writing, the nature of advertisements, the skill of the website operators, functional stability, customer service, user experience, and legibility of the text. In Korea, Kim studied how users evaluate the reliability of web information sources (Kim, 2007a), the factors affecting website reliability and their importance (Kim, 2007b), and criteria for evaluating website reliability (Kim, 2011). The study of reliability evaluation methods found that users were very passive in judging website reliability, despite the large share of web information in their daily lives. Among internet information sources, sports websites were rated the most reliable, and academic databases, news sites, financial institutions, and government websites also showed relatively high reliability.
The main factors in website trust included ‘easy information search’, ‘trust based on past experience’, ‘quick updates’, ‘discovery of major facts about the website’, and ‘ease of finding information sources’. In the study of factors affecting website reliability and their importance, the factors affecting the perceived reliability of web information sources were classified into four categories: expertise factors, trust factors, advertisement factors, and other factors. Using 49 reliability factors, the study analyzed which characteristics or elements of websites make people believe the information they find online. Of the 49 factors, 29 were identified as positive, such as update frequency and ease of search, and 20 as negative, such as difficulty of search and dead links. Finally, the study on criteria for the critical evaluation of online information sources analyzed standards and guidelines related to the evaluation of information sources and presented criteria such as authority, objectivity, quality, coverage, currency, and relevance.

2.2 Study on the evaluation factors of the online subject guides

Among studies of evaluation factors for subject guides, the most representative online information source provided by libraries, Dunsmore (2002) derived the key elements of a subject guide through qualitative research on web-based pathfinders. To this end, Dunsmore surveyed the purpose, concepts, and principles of web-based pathfinders or subject guides at 10 business school libraries each in the United States and Canada, for a total of 20 university libraries, and investigated the components of company guides, industry guides, and marketing guides. The components identified for web-based pathfinders included transparency, which conveys the pathfinder’s purpose, concepts, and principles; consistency, meaning uniformity in the selection and presentation of subject guide titles; accessibility, meaning the provision of paths to the subject guide from the library’s website; and selectivity, meaning guidance on the scope of the resources the subject guide covers. Jackson and Pellack (2004) and Jackson and Stacy-Bates (2016) analyzed the online subject guides of university libraries, seeking to identify changes in them through a longitudinal study. In 2004, they developed a 10-item questionnaire for analyzing subject guides and selected 121 libraries from ALA to analyze four fields: philosophy, journalism, astronomy, and chemistry. In 2016, they analyzed the chemistry, journalism, and philosophy subject guides of 32 university libraries associated with ALA, using a slightly modified questionnaire.
Compared with the 2004 data, the overall scores were quite similar, but the proportion of libraries testing links increased from 54% to 94%, and the proportion using statistics to analyze user behavior increased from 67% to 88%. Subject guide development, link status, recency, value, statistics, and evaluation were assessed as the same factors in 2004 and 2016, while the format, content, and operation items were slightly revised to reflect current practice. Noh and Jeong (2017) developed the elements needed for the National Library of Korea to provide its web information sources on modern Korean literature as an effective service that meets public needs. Their final evaluation framework comprised 4 areas (utility, content, form, and mutual cooperation), 23 evaluation factors, and 67 detailed evaluation items and questions. Key evaluation factors included website trust, communication with users, accessibility of subject guides, ease of searching for published books, provision of information in a consistent format, and scope of subject guides. When the factors were applied to the National Library of Korea’s subject bibliography system, a web information source site, it provided 8 of 20 utility items (40%), 13 of 20 content items (65%), 15 of 17 form items (88%), and 1 of 10 mutual cooperation items (10%), that is, 37 of the 67 detailed evaluation items (55.2%).
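The coverage ratios reported by Noh and Jeong (2017) are simple proportions of detailed items provided in each area. The short Python sketch below recomputes them from the counts given in the text; the dictionary layout and the `percent` helper are illustrative choices, not part of the original study.

```python
# Detailed evaluation items provided / total items per area, as reported for the
# National Library of Korea's subject bibliography system (Noh and Jeong, 2017).
coverage = {
    "utility": (8, 20),
    "content": (13, 20),
    "form": (15, 17),
    "mutual cooperation": (1, 10),
}


def percent(provided: int, total: int) -> float:
    """Share of items provided, as a percentage rounded to one decimal place."""
    return round(100 * provided / total, 1)


for area, (provided, total) in coverage.items():
    print(f"{area}: {provided}/{total} = {percent(provided, total)}%")

# The overall figure pools the counts across areas rather than averaging percentages.
provided_all = sum(p for p, _ in coverage.values())
total_all = sum(t for _, t in coverage.values())
print(f"overall: {provided_all}/{total_all} = {percent(provided_all, total_all)}%")
```

Pooling the counts (37 of 67) rather than averaging the four area percentages is what yields the 55.2% overall figure.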

2.3 Study on the evaluation of the research achievements in the area of humanities

Studies in the area of the humanities were concentrated in the 1970s and 1980s and have been declining since 2005 (Chung & Choi, 2011). The most important consideration in evaluating research results in the humanities is recognizing the diversity of research. Centra (1977) confirmed that the criteria used to evaluate university professors at 134 universities varied across fields such as the social sciences, the humanities, and the natural sciences. Researchers in the humanities emphasize published books over journals, and they have criticized existing quantitative, paper-centric evaluation methods and called for their improvement (Finkenstaedt, 1990; Skolnik, 2000). Furthermore, Kim, Lee and Park (2006) argued that evaluation criteria in the humanities should rest on values different from those used to evaluate research results in science and engineering, and that, for an evaluation to reflect the specific characteristics of the humanities, a method would be needed that distinguishes fields emphasizing research papers from fields emphasizing academic books. Moed (2008) developed a matrix for evaluating research productivity in the humanities, presenting the academic activities and research achievements of researchers in each field in order to reflect the academic specificity of the humanities. However, further data analysis was needed before it could serve as a qualitative measure of research achievements in the humanities. Chung and Choi (2011) examined the various types of research achievements of humanities and social sciences professors.
Along with the basic principles of evaluation, they identified the various types of research achievements in the humanities and social sciences and proposed improvements drawing on cases from both domestic and foreign universities.

3. Methods

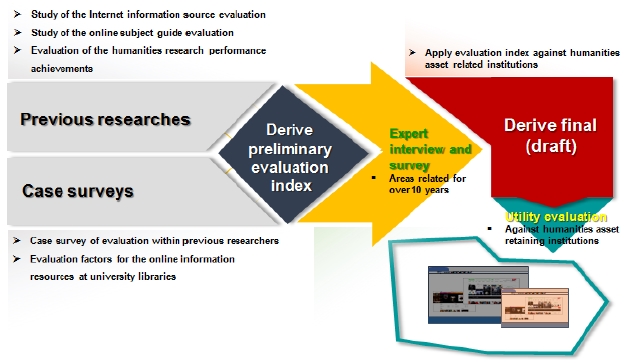

In this study, the evaluation factors were developed through the following steps. First, we analyzed previous domestic and foreign research on reliability evaluation, focusing mainly on the evaluation of Internet information sources, the evaluation of online subject guides, and the evaluation of research achievements in the humanities. Second, we analyzed the evaluation factors for online information resources provided by university libraries, collecting the factors they present for examining the reliability of online information resources and using them to determine preliminary evaluation factors. Third, based on the preliminary evaluation factors, we interviewed 8 doctoral researchers who had conducted research in related fields (library and information science, records management, sociology, Korean language and literature, philosophy, and cultural contents) for more than 10 years, and derived the final evaluation factors reflecting their opinions. Fourth, we applied the resulting reliability evaluation index to institutions retaining humanities assets.

4. Results

4.1 Analysis of cases and surveys

In this study, we investigated the evaluation items that university libraries present to their users for evaluating online resources.

The evaluation criteria for online information sources provided by the library of the University of California at Berkeley comprise 6 major categories: responsibility, purpose, publication and format, relevance, date of publication, and record. Through sub-categories and detailed evaluation items, the library enables individuals to evaluate online information sources themselves.

Evaluation criteria for online information sources at the University of California at Berkeley’s libraries

The library of Johns Hopkins University uses 6 major categories: author, accuracy and verifiability, recency, publishing organization, perspective or bias, and references and related literature. Information about the author is regarded as the most important part of evaluating information sources, and the library presents 32 detailed items as the evaluation criteria users need.

The library of Georgetown University divides its evaluation criteria for internet data into 7 categories: responsibility, purpose, objectivity, accuracy, reliability, currency, and links. A total of 31 detailed evaluation criteria help users evaluate the reliability of internet data.

The library of the University of Oregon provides evaluation criteria for online information through 8 items (reliability, formality, validity, perspective, time, references, purpose, and expected readers), together with 16 detailed evaluation criteria, such as the reliability of the information contained, through which online information can be evaluated.

4.2 Derivation of the preliminary evaluation index

In this study, in order to assess the reliability of humanities and social science information held by public and private institutions, we analyzed evaluation indexes of web information sources for subject guides, the evaluation criteria for online information sources of university libraries, reliability evaluation factors proposed by researchers, factors affecting website reliability, and evaluation indexes for the research achievements of humanities professors. From these 26 research projects and institutional cases, shown in Table 6, we derived the preliminary evaluation index.

Table 7 summarizes the references that provide detailed evaluation criteria for the derived evaluation index. The final preliminary evaluation index comprises 2 dimensions, 10 major categories, 33 sub-categories, and 47 detailed criteria. The institutions’ own preliminary reliability index is divided into 4 major categories (institutional authority, data collection and construction, data provision, and data suitability), with 14 sub-categories and 23 detailed evaluation criteria. The institution-provided service and system reliability index is divided into 6 major categories: information quality, appropriateness (recency), accessibility, tangibility, form, and cooperation; it has 19 sub-categories and 24 detailed evaluation criteria. The reliability of institutional information is evaluated out of a total of 200 points, 100 points for each dimension.
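The structure of the preliminary index can be checked by summing its per-dimension counts. This Python sketch uses a hypothetical `Dimension` record for illustration; only the numbers come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    """One dimension of the preliminary index (hypothetical record type)."""
    name: str
    major: int       # number of major categories
    sub: int         # number of sub-categories
    detailed: int    # number of detailed evaluation criteria
    max_points: int  # points allotted to the dimension


preliminary = [
    Dimension("institution's own reliability", 4, 14, 23, 100),
    Dimension("institution-provided service and system", 6, 19, 24, 100),
]

# Totals across both dimensions; these should match the figures stated in the text.
totals = {
    "major": sum(d.major for d in preliminary),
    "sub": sum(d.sub for d in preliminary),
    "detailed": sum(d.detailed for d in preliminary),
    "points": sum(d.max_points for d in preliminary),
}
print(totals)  # {'major': 10, 'sub': 33, 'detailed': 47, 'points': 200}
```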

4.3 Acceptance and application of opinions through expert meeting

In this study, experts in the humanities and social sciences were interviewed, and the final evaluation index was derived.

Regarding the institutions’ own reliability evaluation index, the experts found that the necessary items were generally in place, but responded that the point allocation needed to be adjusted where the evaluation criteria were ambiguous. In addition, since such institutions aim to support the research of all scholars and to promote the continuing development of scholarship through research achievements, the experts responded that the reliability evaluation should be based on the opinions of scholars, including new scholars, rather than on those of a limited number of established authorities. The experts likewise found that the necessary items were in place in the service and system reliability evaluation index. However, given the limitations of purely quantitative evaluation for the academic nature of the humanities and social sciences, they suggested that reasonable criteria and alternatives for qualitative evaluation are needed.

For the institutions’ own preliminary reliability evaluation index, items with ambiguous criteria were deleted based on expert opinions, items expressing the same concept were merged, and important items were separated out. Reference scores were also raised or lowered to reflect the experts’ views on the items’ relative importance. The reputation item was deleted and integrated into the authority items, and the quantitative evaluation of the amount of retained data was subdivided by data type, with its score raised.

For the institution-provided service and system preliminary reliability evaluation index, the score for information quality, the first major category, was raised and the score for tangibility was lowered. The second category was revised, for example to cover the latest updates, and the item on convenience of information search and access was deleted because it was ambiguous and duplicated user friendliness. These details are summarized in Table 8.

4.4 Derivation of the final reliability evaluation index for the institution retained information resources

The final reliability evaluation index for institution-retained information resources, reflecting previous research, case studies, and expert opinions, consists of 2 dimensions, 10 major categories, 30 sub-categories, and 47 detailed items, with 100 points for each dimension for a total of 200 points.

Table 9 summarizes the final institutions’ own reliability evaluation index, which comprises 4 major categories, 13 sub-categories, and 24 detailed items, with 25 points for institutional authority, 25 points for data collection and construction, 30 points for data provision, and 20 points for data suitability, for a total of 100 points.

Table 10 summarizes the final reliability evaluation index for institution-provided services and systems, which comprises 6 major categories, 18 sub-categories, and 23 detailed items, with 25 points for information quality, 15 points for appropriateness (recency), 15 points for accessibility, 20 points for tangibility, 15 points for form, and 10 points for cooperation, for a total of 100 points.
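The final point allocations can be encoded so that each dimension is checked against its 100-point total. The category names and points below come from the text; the dictionaries and the `check_dimension` helper are illustrative, not an implementation used in the study.

```python
# Final index: major category -> maximum points (figures from Tables 9 and 10).
OWN_RELIABILITY = {
    "institutional authority": 25,
    "data collection and construction": 25,
    "data provision": 30,
    "data suitability": 20,
}
SERVICE_AND_SYSTEM = {
    "information quality": 25,
    "appropriateness (recency)": 15,
    "accessibility": 15,
    "tangibility": 20,
    "form": 15,
    "cooperation": 10,
}


def check_dimension(name: str, allocation: dict, expected: int = 100) -> int:
    """Return the dimension total, raising if it does not equal the expected maximum."""
    total = sum(allocation.values())
    if total != expected:
        raise ValueError(f"{name}: categories sum to {total}, expected {expected}")
    return total


grand_total = (check_dimension("own reliability", OWN_RELIABILITY)
               + check_dimension("service and system", SERVICE_AND_SYSTEM))
print(grand_total)  # 200
```

Keeping the allocation as data makes it easy to re-check the totals whenever expert feedback shifts points between categories, as happened between the preliminary and final versions.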

4.5 Application of the final reliability evaluation index for the institution’s retained information resources

The reliability index for institution-retained data developed in this study was applied to 6 related institutions to evaluate its utility: Korean Research Memory (KRM), Humanities Korea (HK), the Korean Studies Promotion Service’s Achievement Portal (KSPS), the Research Information Service System (RISS) of the Korea Education & Research Information Service (KERIS), the National Knowledge Information System (NKIS), and the Public Data Portal (DATA). Two internal researchers and 8 external researchers visited each institution’s website and used and evaluated it directly, and the reliability of each institution’s retained resources was determined from the average of the researchers’ scores.
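The text states only that scores were averaged across the 10 researchers. A minimal sketch, assuming a simple unweighted mean over evaluators, is shown below; the evaluator labels and scores are hypothetical and are not the study’s data.

```python
from statistics import mean


def institution_score(scores_by_evaluator: dict[str, float]) -> float:
    """Unweighted mean of one institution's scores across all evaluators (assumed method)."""
    if not scores_by_evaluator:
        raise ValueError("at least one evaluator score is required")
    return mean(list(scores_by_evaluator.values()))


# Hypothetical scores from 2 internal and 8 external evaluators (illustration only).
example = {f"internal_{i}": s for i, s in enumerate([60, 62], start=1)}
example.update(
    {f"external_{i}": s for i, s in enumerate([58, 61, 59, 60, 63, 57, 60, 60], start=1)}
)
print(institution_score(example))  # 60
```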

The reliability evaluation in this study consisted of 100 points for the institutions’ own reliability and 100 points for the reliability of institution-provided services and systems. However, because some items required the institutions’ internal data, the evaluation was based on 64 points for the institutions’ own reliability and 83 points for service and system reliability.
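Excluding items that require internal data lowers the attainable maximum for each dimension. The sketch below assumes each item carries a flag for whether it needs internal data; the `Item` type, item names, and point values are hypothetical, and expressing a score as a percentage of the applicable maximum is an illustrative normalization the study does not report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """A detailed evaluation item (hypothetical structure for illustration)."""
    name: str
    max_points: float
    needs_internal_data: bool  # True if only the institution's internal records can answer it


def applicable_maximum(items: list[Item]) -> float:
    """Maximum score attainable once internal-data items are excluded."""
    return sum(i.max_points for i in items if not i.needs_internal_data)


def share_of_applicable(score: float, items: list[Item]) -> float:
    """Score as a percentage of the applicable maximum (assumed normalization)."""
    return round(100 * score / applicable_maximum(items), 1)


# Hypothetical items only; the study's actual items are listed in its tables.
items = [
    Item("retained data by type", 10, False),
    Item("budget for data construction", 5, True),
    Item("update frequency", 10, False),
]
print(applicable_maximum(items))       # 20
print(share_of_applicable(17, items))  # 85.0
```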

The results of the institutions’ own reliability evaluation for the 6 institutions are shown in Table 11 below. Of the 64 applicable points, KRM scored highest with 62, earning perfect scores on most items other than those evaluated through the institutions’ internal data. The other 5 institutions showed similar levels of own reliability, ranging from 56 to 58.5 points.

The service and system reliability evaluation items totaled 83 points, and the results are summarized in Table 12 below. Of the 6 institutions, the Research Information Service System (RISS) of the Korea Education & Research Information Service (KERIS) scored 81 of 83 points, indicating very high service and system reliability, followed by the National Knowledge Information System (NKIS) with 78 points. In contrast to its result on the institutions’ own reliability index, Korean Research Memory (KRM) scored low across the board, including updates of recent information (3.5/10 points), information search (5.5/10 points), convenience of use (1.5/5 points), interface (4/8 points), and usage statistics (0/3 points), for a total of 56 of 83 points.

Across both dimensions of the index, the Research Information Service System (RISS) of the Korea Education & Research Information Service (KERIS) showed the highest reliability with 139 points, followed by the National Knowledge Information System Portal (NKIS) and the Korean Studies Promotion Service’s Achievement Portal (KSPS). Korean Research Memory (KRM) had the lowest reliability with 118 points, with Humanities Korea (120 points) and the Public Data Portal (122 points) also at the lower end. KRM thus earned the highest score of the 6 institutions on the institutions’ own reliability evaluation but the lowest on the service and system evaluation.
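Each overall result is the sum of the two dimension scores. The sketch below uses only figures from the text: KRM’s 62 and 56 points, and RISS’s 81 service points and 139-point total, from which its own-reliability score (58) is derived by subtraction. The percentage of the 147 applicable points is an illustrative addition, not a figure the study reports.

```python
# Applicable maxima after excluding items that required internal data.
OWN_MAX, SERVICE_MAX = 64, 83
APPLICABLE_MAX = OWN_MAX + SERVICE_MAX  # 147

# Dimension scores stated in the text; RISS's own-reliability score is derived as 139 - 81.
reported = {
    "KRM": {"own": 62, "service": 56},
    "RISS": {"own": 139 - 81, "service": 81},
}

# Overall reliability = own-reliability score + service/system score.
totals = {name: s["own"] + s["service"] for name, s in reported.items()}

for name, total in totals.items():
    share = round(100 * total / APPLICABLE_MAX, 1)
    print(f"{name}: {total}/{APPLICABLE_MAX} points ({share}%)")
# KRM: 118/147 points (80.3%)
# RISS: 139/147 points (94.6%)
```

The derived RISS own-reliability score of 58 falls within the 56 to 58.5 range reported for the five institutions other than KRM, which is consistent with the text.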

5. Discussion

The development of information technology has brought about tremendous growth in the production of information resources, and institutions recognizing the importance of information resources have been established to collect, preserve, and provide them. Yet questions about the reliability of the information resources retained by each institution have continued to be raised. An index that can objectively measure this reliability has therefore become necessary, and this study has accordingly developed an evaluation index for determining the reliability of the resources retained by such institutions.

To this end, we derived an evaluation index applicable to humanities assets through previous research, case studies, and expert advice. We then applied the derived index to 6 representative Korean information service institutions, including Korean Research Memory (KRM), which holds many humanities assets.

The reliability evaluation index presented in this study does not evaluate the reliability of individual information resources retained by each institution; rather, it evaluates the integrated reliability of all the information resources an institution retains. The index covers the quantitative aspects of the data retained by each institution, the authority of the institution, its system for data collection, and the suitability of its data, together with the service quality of its information resources and the interface of its information provision system. For this reason, even when an institution’s information resources are excellent in certain areas, the reliability of its retained information resources as a whole may be relatively low. This design reflects the study’s aim of developing an evaluation index that supports a single network and integrated services as the number of institutions retaining humanities assets grows. Accordingly, the index can be applied collectively to all institutions retaining humanities assets, such as government agencies, private institutions, and university institutions, to evaluate the reliability of their information resources, thereby laying the groundwork for building an integrated network for humanities assets.

In this study, we developed a reliability evaluation index for the information resources held by institutions using several methodologies. Nevertheless, the study has limitations both in the evaluation items themselves and in how they were applied. Ideally, the evaluation items would have been fully refined during development, and the index would have been applied to all government agencies, private institutions, and university research institutes related to the humanities and social sciences. However, given the limited research period and the broad scope of the research, the following limitations remain and should be addressed in subsequent studies.

With respect to the evaluation items, some criteria in the newly developed index, such as reputation, authority, and quality standards, remain ambiguous and should be defined explicitly through further study. There are also limitations in how the evaluation items were applied.

First, this study attempted to apply the evaluation items to all institutions. However, many items in the proposed index must be assessed using each institution's internal data, so the utility of the index was verified by applying it to only six representative institutions. Second, when the index is applied to government agencies, private institutions, and university research institutes in the future, the application criteria should be differentiated by type of institution to reflect the differences between them, rather than applied uniformly. Therefore, specific criteria need to be established for the evaluation items, and further research is needed on how to collect internal data and how to tailor the evaluation index to each type of institution so that government agencies, private institutions, and university research institutes can all be evaluated.

Furthermore, regarding the scope of humanities assets, the case studies in this paper were limited to the traditional humanities. However, the scope of humanities assets has since expanded to include culture and the arts, and there is a growing trend to include convergence research as well. Subsequent research is therefore needed to broaden both the scope of humanities assets collected and the range of institutions connected.

6. Conclusion

The humanities formed the most important part of the curriculum at Western universities from the Middle Ages onward. However, humanities-centered education flourished only briefly, in the 19th century and mainly in Germany. Over the past two decades, as evaluation systems for the humanities have been developed, the humanities have been compelled to "voluntarily" abandon their past methodological traditions (Park, 2014). Nevertheless, with growing interest in the humanities in recent years, information services for the humanities have become more important, and the number of institutions that collect and provide such information has steadily increased. Against this background, this study attempted to evaluate the reliability of institutions that hold diverse humanities information resources.

A key characteristic of the reliability evaluation index developed in this study is that it assesses the reliability of an institution's information resources based on the overall trust level of the institution rather than on individual data units. Each evaluation item was designed to reflect the specific characteristics of humanities assets: for example, the recency criterion measures whether materials are appropriately current rather than simply how recent they are, and published books are given greater weight than SCI papers. In this way, the index seeks to measure the reliability of the information resources held by institutions that retain humanities assets.

As noted earlier, the reliability evaluation index in this study was developed to assess the overall reliability of the resources held by each institution, on the premise of a cooperative network among institutions that hold humanities information resources. Consequently, its results may run counter to the reliability of the individual information resources held by each institution. However, in building a cooperative network among institutions, the overall reliability of each institution's information resources matters far more than that of individual resource units, so the evaluation index of this study is meaningful in its own right.

Acknowledgments

This work is based on the results of the Policy Research Service Task ‘Policy Research 2017-57’, supported by the National Research Foundation of Korea (NRF), and may differ from the NRF's official view.

References

- Berkeley Library, ([n.d.]), Berkeley University Library Evaluating Resources, Retrieved from http://guides.lib.berkeley.edu/evaluating-resources#authority.

- Centra, J. A., (1977), How universities evaluate faculty performance: A survey of department heads, Retrieved from https://www.ets.org/Media/Research/pdf/GREB-75-05BR.pdf.

- Chung, Y. K., & Choi, Y. K., (2011), A Study on Faculty Evaluation of Research Achievements in Humanities and Social Sciences, Journal of Information Management, 42(3), p211-233. [https://doi.org/10.1633/jim.2011.42.3.211]

- Dunsmore, C., (2002), A qualitative study of web-mounted pathfinders created by academic business libraries, Libri, 52(3), p137-156. [https://doi.org/10.1515/libr.2002.137]

- Finkenstaedt, T., (1990), Measuring research performance in the humanities, Scientometrics, 19(5-6), p409-417. [https://doi.org/10.1007/bf02020703]

- Fogg, B. J., Kameda, T., Boyd, J., Marshall, J., Sethi, R., Sockol, M., & Trowbridge, T., (2002), Stanford-Makovsky web credibility study 2002: Investigating what makes web sites credible today, Report from the Persuasive Technology Lab, Retrieved from http://credibility.stanford.edu/pdf/Stanford-MakovskyWebCredStudy2002-prelim.pdf.

- Fogg, B. J., Soohoo, C., Danielson, D. R., Marable, L., Stanford, J., & Tauber, E. R., (2003, June), How do users evaluate the credibility of Web sites?: a study with over 2,500 participants, In Proceedings of the 2003 conference on Designing for user experiences, p1-15, ACM.

- Fogg, B. J., & Tseng, H., (1999, May), The elements of computer credibility, In Proceedings of the SIGCHI conference on Human Factors in Computing Systems, p80-87, ACM, Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.83.8354&rep=rep1&type=pdf.

- Gang, J. Y., Nam, Y. H., & Oh, H. J., (2017), An Evaluation of Web-Based Research Records Archival Information Services and Recommendations for Their Improvement: NTIS vs. NKIS, Journal of Korean Society of Archives and Records Management, 17(3), p139-160.

- Georgetown Library, ([n.d.]), Georgetown University Library Evaluating Internet Resources, Retrieved from https://www.library.georgetown.edu/tutorials/research-guides/evaluating-internet-content.

- Jackson, R., & Pellack, L. J., (2004), Internet subject guides in academic libraries: An analysis of contents, practices, and opinions, Reference & User Services Quarterly, 43(4), p319-327.

- Jackson, R., & Stacy-Bates, K. K., (2016), The enduring landscape of online subject research guides, Reference & User Services Quarterly, 55(3), p219. [https://doi.org/10.5860/rusq.55n3.219]

- Jauch, L. R., & Glueck, W. F., (1975), Evaluation of university professors' research performance, Management Science, 22(1), p66-75. [https://doi.org/10.1287/mnsc.22.1.66]

- Jeong, W. C., & Rieh, H. Y., (2016), Users' Evaluation of Information Services in University Archives, Journal of Korean Society of Archives and Records Management, 16(1), p195-221. [https://doi.org/10.14404/jksarm.2016.16.1.195]

- Johns Hopkins Libraries, ([n.d.]), Johns Hopkins University Libraries Evaluating Your Sources, Retrieved from https://guides.library.oregonstate.edu/c.php?g=286235&p=1906707.

- Kang, H. I., & Jeong, Y. I., (2002), Measuring Library Online Service Quality: An Application of e-LibQual, Journal of the Korean Society for Information Management, 19(3), p237-261.

- Keum, J. D., & Weon, J. H., (2012), Establishing control system for the credibility of performance information, The Journal of Korean Policy Studies, 12(1), p59-75.

- Kim, D. N., Lee, M. H., & Park, T. G., (2006), Constructing an Evaluation Model for the Professors Academic Achievement in the Humanities, Journal of Educational Evaluation, 19(3), p1-20.

- Kim, H. S., Shin, K. J., & Choi, H. Y., (2007), A Study on the Development of Evaluation Framework for Public Portal Information Services, Proceedings of the Korea Contents Association Conference, 5(1), p440-444.

- Kim, S., (2012), A Study on the Current State of Online Subject Guides in Academic Libraries, Journal of the Korean Society for Information Management, 29(4), p165-189. [https://doi.org/10.3743/kosim.2012.29.4.165]

- Kim, Y. K., (2007a), How Do People Evaluate a Web Sites Credibility, Journal of Korean Library and Information Science Society, 38(3), p53-72.

- Kim, Y. K., (2007b), A Study on the Influence of Factors That Makes Web Sites Credible, Journal of the Korean Society for Library and Information Science, 41(4), p93-111. [https://doi.org/10.4275/kslis.2007.41.4.093]

- Kim, Y. K., (2011), Comparative Study on Criteria for Evaluation of Internet Information, The Journal of Humanities, 27, p87-109.

- Lee, I. Y., (2005), A Study on the Evaluation System of Research Institutes, The Journal of Educational Administration, 23(4), p343-364.

- Lee, L. J., (2016), A Study on the Improvement Strategies of Moral Education Using Humanities, Doctoral dissertation, Seoul National University, Seoul, Korea.

- Lee, Y. H., (2017), A New Evaluating System for Academic Books on Humanities and Social Sciences in Korea, Journal of the Korea Contents Association, 17(3), p624-632.

- Moed, H. F., (2008, December), Research assessment in social sciences and humanities, In ECOOM Colloquium Antwerp, Retrieved from https://www.ecoom.be/sites/ecoom.be/files/downloads/1%20Lecture%20Moed%20Ecoom%20Antwerp%209%20Dec%202011%20SSH%20aangepast%20(2).pdf.

- Noh, Y., & Jeong, D., (2017), A Study to Develop and Apply Evaluation Factors for Subject Guides in South Korea, The Journal of Academic Librarianship, 43(5), p423-433. [https://doi.org/10.1016/j.acalib.2017.02.002]

- Oregon Libraries, ([n.d.]), University of Oregon Libraries Guidelines for evaluating sources, Retrieved from http://diy.library.oregonstate.edu/guidelines-evaluating-sources.

- Park, C. K., (2014), Evaluation in the Humanities: A Humanist Perspective, In/Outside, 37, p84-109.

- Park, N. G., (2006), Analysis of the evaluation status of teaching professions by university and development of assessment model of teaching achievement, Seoul, Ministry of Education & Human Resources Development.

- Skolnik, M., (2000), Does counting publications provide any useful information about academic performance?, Teacher Education Quarterly, 27(2), p15-25.

- Song, H. H., (2011), Problems on current humanities journal assessment system and the alternatives, Studies of Korean & Chinese Humanities, 34, p457-481.

- Standler, B. R., (2004), Evaluating Credibility of Information on the Internet, Retrieved from http://www.rbs0.com/credible.pdf.

- University of Queensland Library, ([n.d.]), UQ Library Evaluate Information You Find, Retrieved from https://web.library.uq.edu.au/research-tools-techniques/search-techniques/evaluate-information-you-find.

- Woo, B. K., Jeon, I. D., & Kim, S. S., (2006), The Effects of the Academic Research Evaluation System and the Research Achievements in Developed Countries, ICASE Magazine, 12(4), p21-32.

- Yoon, S. W., (1996), Reliability Analysis, Seoul, Jayu academy.

Daekeun Jeong has an M.A. and a PhD in Library & Information Science from Chonnam National University, Gwangju. He has published 2 books and 30 articles. He is the director of the Institute of Economic and Cultural in THEHAM, and he teaches courses in Information Policy, DataBase in Theory, Information Systems Analysis, and School Library Management in the Department of Library & Information Science, Chonnam National University. Before that, he taught courses in How to Use Library Information Materials and Indexing and Abstracting in Theory in the Department of Library & Information Science, Chonbuk National University. He has also worked at Chonnam National University Library and the Konkuk University Institute of Knowledge Content Development & Technology.

Younghee Noh has an MA and a PhD in Library and Information Science from Yonsei University, Seoul. She has published more than 50 books, including 3 books awarded as Outstanding Academic Books by the Ministry of Culture, Sports and Tourism (Government), and more than 120 papers, including one selected as a Featured Article by the Informed Librarian Online in February 2012. She was listed in Marquis Who’s Who in the World in 2012-2016 and Who’s Who in Science and Engineering in 2016-2017. She received research excellence awards from both Konkuk University (2009) and Konkuk University Alumni (2013), as well as “the award for Teaching Excellence” from Konkuk University in 2014. She received a research excellence award from the ‘Korean Library and Information Science Society’ in 2014. One of the books she published in 2014 was selected as one of the ‘Outstanding Academic Books’ by the Ministry of Culture, Sports and Tourism in 2015. She received the Award for Professional Excellence as an Asia Library Leader from the Satija Research Foundation in Library and Information Science (India) in 2014. She served as Chief Editor of the World Research Journal of Library and Information Science from March 2013 to February 2016. Since 2004, she has been a Professor in the Department of Library and Information Science at Konkuk University, where she teaches courses in Metadata, Digital Libraries, Processing of Internet Information Resources, and Digital Contents.